Every optimization provider promises maximum returns for your flexible assets, but once the contract is signed, how do you verify those claims? Without proper evaluation, asset owners are often left wondering what’s really happening in the optimization black box.

If you own flexible assets like batteries, solar, or EV fleets, you already know that optimization is the name of the game. You need intelligent algorithms to trade in balancing markets, shave peaks, and shift loads.

And every provider makes the same pitch: maximum returns, optimized cycling, and the smartest algorithms on the market.

But once the contract is signed and the system goes live, a new, quieter problem emerges.

The "trust me" problem

How do you actually know if your optimization service is performing as well as it could?

If your report says you earned X yesterday, that sounds great. But could you have earned Y? Did that peak-shaving event actually hit the optimal window, or did it miss by 15 minutes?

Currently, comparing optimization performance is annoyingly difficult because:

- Vendors use different metrics and reporting standards

- Validating performance requires hours of manual work, wrangling CSVs, and complex spreadsheet modeling, if you can even export your own data

- Running the equivalent of an A/B test between two optimization services is too resource-intensive

Most companies end up sticking with a vendor simply because the cost of verifying its performance is too high and switching would take too long. You are left trusting the "black box", hoping you aren't leaving significant revenue on the table.

Proof is power

At Helicon, we believe that transparency should be the standard and the playing field should be fair.

We have spent a lot of time listening to our clients, and the feedback is consistent: They need an automated way to see exactly how well their optimization strategies are performing, without needing a team of data scientists to audit the logs every morning.

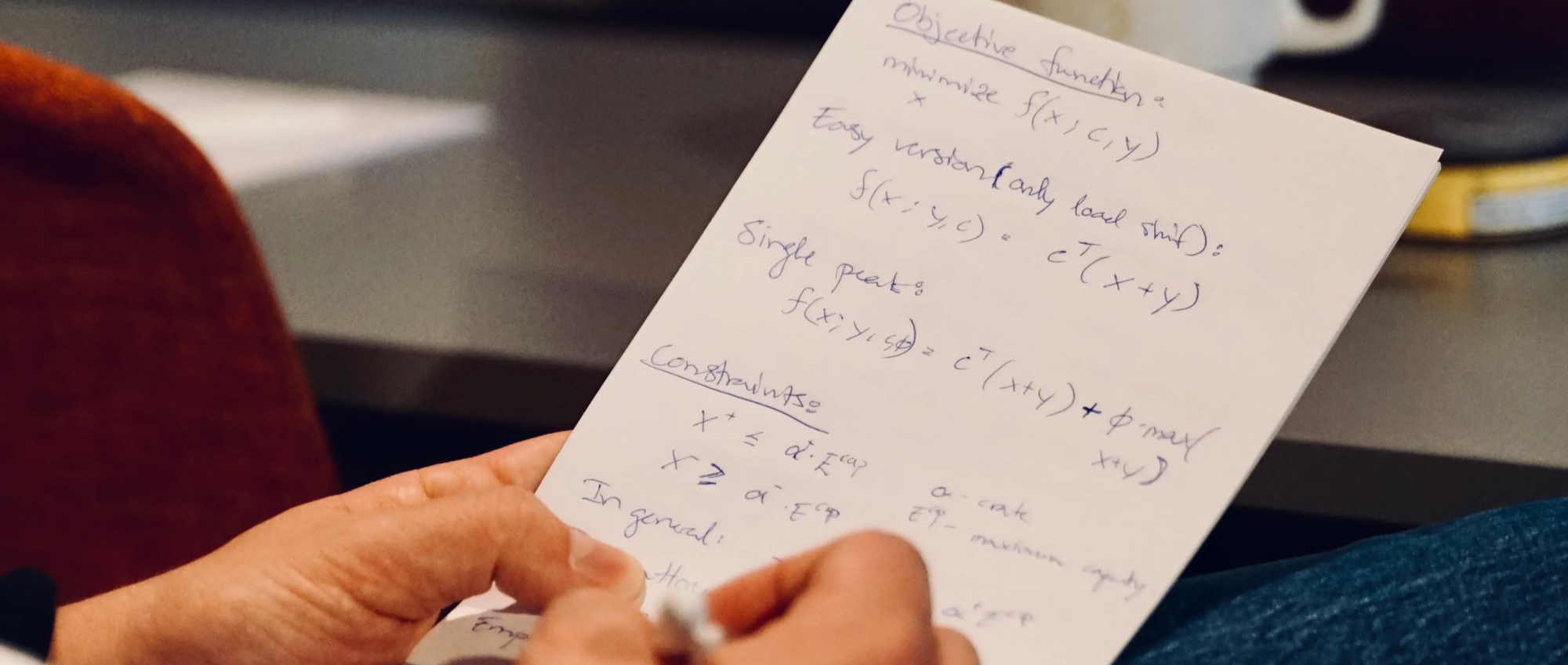

We are currently developing a new evaluation framework designed to solve exactly this.

We’re not just building another dashboard. We are building a dedicated engine for objective performance auditing, along with the market benchmark that is currently missing. We are designing this tool to answer questions such as:

- Is this algorithm truly delivering on its ROI promise?

- Are the optimization strategies biased toward increasing the provider's own revenue share rather than reducing your energy costs?

- How does your performance stack up against a market benchmark?

- How are the optimization services delivering in other areas, such as onboarding, customer support, security, and transparency?

Test our framework with your own data

Do you want to see how close you are to the theoretical maximum and identify where to pivot to maximize your ROI? With our performance audit report, you receive a definitive benchmark that compares your actual results against the theoretical maximum value.

Our audit covers:

→ Multi-market revenues

→ Direct costs

→ Battery management limitations

Stop guessing. Get the black-and-white truth on how your aggregator is actually performing.